Roblox Sexual Abuse Lawsuits

Nationwide Legal Representation for Victims of Online Child Exploitation

Families across the country are pursuing Roblox sexual abuse lawsuits after learning that predators used Roblox and connected apps like Discord to groom, exploit, and harm children. At Rosenfeld Injury Lawyers, we are leading efforts to hold technology companies accountable when they fail to protect minors, and we are here to stand with families seeking answers, justice, and meaningful reform.

Children and teens deserve safe online spaces, especially on platforms designed and marketed for young users.

Yet, disturbing patterns of grooming, coercion, and sexual exploitation have emerged across Roblox, exposing major failures in content moderation, age verification, and user safety systems. Many victims and parents had no idea the danger existed until the unimaginable happened.

If your child was targeted, manipulated, or exploited on Roblox or Discord, our dedicated legal team can help. Our sexual abuse lawyers combine trauma-informed advocacy with deep experience in child sexual abuse litigation to guide families through every step of the legal process.

You can trust us to listen, to protect your child’s privacy, and to fight aggressively for accountability and compensation, so your family can begin to heal and move forward.

Roblox Lawsuit Developments

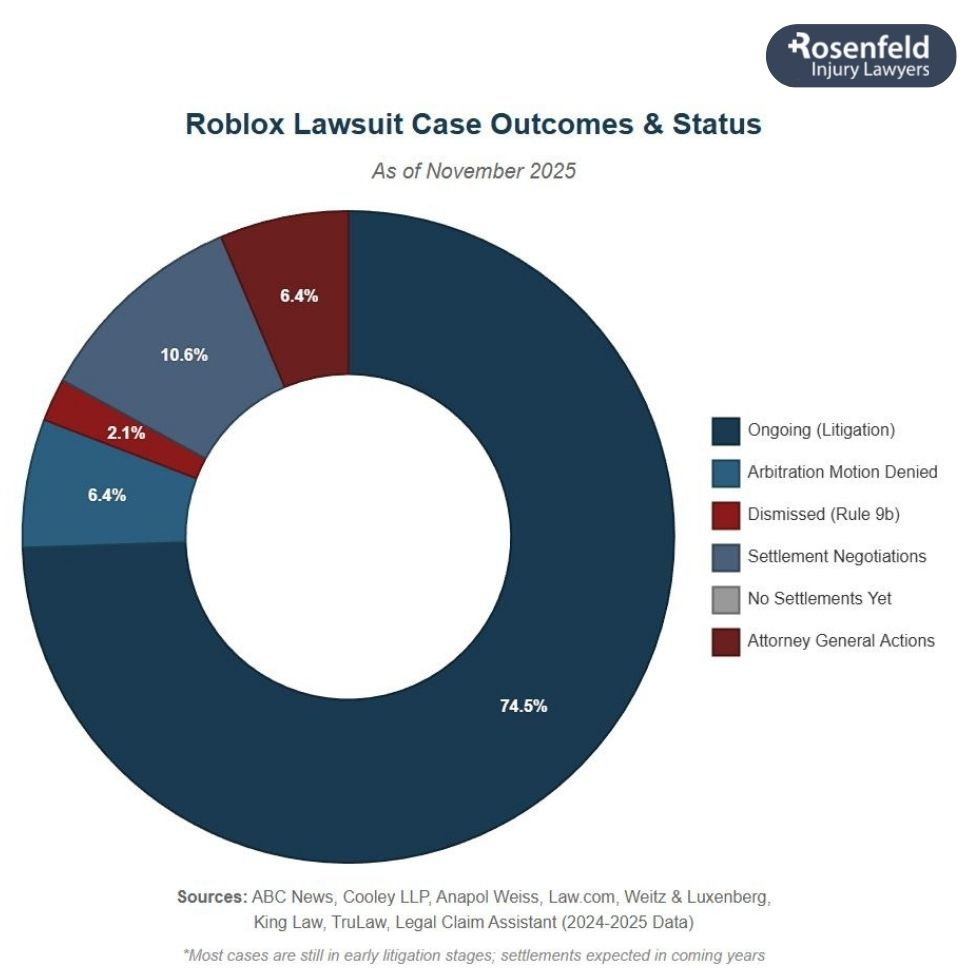

Across the country, a growing number of Roblox sexual abuse lawsuits are revealing the platform’s deep failures to protect young users from grooming and child sexual exploitation.

Families, state officials, and federal investigators have uncovered disturbing evidence that predators used the online gaming platform to contact, manipulate, and exploit minors, often through private chats and connected Discord channels.

The following timeline highlights some of the most significant legal actions, government investigations, and class action lawsuits filed against Roblox Corporation and Discord Inc. for their alleged role in enabling sexual abuse and distributing explicit content involving children.

September 2025 – Roblox and Discord Child Sexual Exploitation MDL

As these lawsuits multiplied, the U.S. Judicial Panel on Multidistrict Litigation consolidated dozens of related federal cases under MDL-3166. This centralized litigation, now pending in federal court, accuses Roblox and Discord of creating unsafe environments that facilitated child sexual exploitation and psychological harm.

The MDL allows families nationwide to coordinate discovery, share evidence, and pursue accountability together.

August 2025 – Louisiana Attorney General v. Roblox Corporation

In August 2025, Louisiana Attorney General Liz Murrill filed a groundbreaking lawsuit accusing Roblox Corporation of enabling large-scale child grooming and sexual exploitation. The complaint alleges that the online gaming platform ignored internal safety warnings, misled parents, and failed to implement even the most basic protections for young users.

According to the lawsuit, Roblox knowingly allowed its platform to host and distribute sexually explicit content involving minors, creating what investigators described as a “distribution hub” for harmful material. The complaint argues that Roblox prioritized profits over child safety, leaving children vulnerable to predatory interactions.

August 2025 – California Mother v. Roblox & Discord

That same month, a California mother filed suit against Roblox and Discord, alleging that a 10-year-old girl was sexually exploited by an adult posing as a peer. The predator contacted the child through in-game chats, sent explicit photos, and manipulated her into sending images in return.

The lawsuit alleges that both companies failed to detect or stop the ongoing abuse despite their marketing claims of safe, moderated environments for young users.

August 2025 – People v. Matthew Macatuno Naval

Also in August, authorities charged Matthew Macatuno Naval, 27, with abducting a 10-year-old girl he met on Roblox and continued communicating with through Discord. According to authorities, he obtained her address, drove across the state, and took her hundreds of miles away before law enforcement recovered her safely.

A related federal lawsuit names Roblox and Discord for failing to enforce safety features or restrict direct messaging between minors and adults.

August 2025 – Georgia Lawsuit Alleges Insufficient Parental Controls

A Georgia family filed suit on behalf of their 9-year-old child, who was contacted by multiple adults pretending to be children. The predators sent sexually explicit messages and coerced the victim into sending images. When the child tried to stop, they threatened to share the photos publicly.

The lawsuit alleges that Roblox’s moderation and parental controls were insufficient, allowing the abuse to continue unchecked.

July 2025 – Multi-State Grooming Cases Involving Online Predators

In July, two separate Roblox sexual abuse lawsuits were filed after a 13-year-old girl was taken across state lines and a 14-year-old Alabama girl was lured from her home. Both cases involved predators who first gained trust through Roblox and then moved conversations to Discord before attempting in-person meetings.

Authorities intervened before further harm occurred, citing Roblox’s lack of monitoring of direct messages and off-platform contact.

May 2025 – Christian Scribben Arrest and Related Lawsuits

Federal agents arrested Christian Scribben in May 2025 for using Roblox and Discord to groom and exploit children as young as eight. Victims were instructed to create sexually explicit photos and videos, which were distributed through Discord servers.

Multiple Roblox lawsuits claim the company failed to remove Scribben’s accounts despite repeated user reports and ignored early warning signs of systemic exploitation.

May 2025 – Texas Girl Case and Related Filings

A family sued after a 13-year-old girl was groomed and assaulted by a predator she met on Roblox. According to the lawsuit in Texas, the man used Discord to locate her home and record the assault.

Additional lawsuits filed in San Mateo County, California, and Georgia that month involved minors coerced into producing child sexual abuse material through Roblox’s private chat system. One of these cases involved a 16-year-old Indiana girl trafficked to Georgia and assaulted after meeting her abuser through Roblox.

April 2025 – Multiple Lawsuits Against Roblox and Discord

Two April 2025 lawsuits highlighted the same recurring pattern: predators initiating contact through Roblox private messages and moving victims to Discord for coercion.

One involved Sebastian Romero, accused of exploiting at least 25 minors, including a 13-year-old boy whom he manipulated into sharing sexually explicit images. Plaintiffs allege both companies failed to verify user ages or monitor unsafe environments despite known risks.

2024 – National Investigation and Developer Involvement

A nationwide investigation uncovered extensive child exploitation occurring across both Discord and Roblox. Among the most disturbing cases was that of Arnold Castillo, a Roblox developer accused of trafficking a 15-year-old Indiana girl across state lines. During her eight-day captivity, she was repeatedly sexually abused and referred to by Castillo as a “sex slave.”

The investigation also documented other incidents, including a registered Kansas sex offender contacting an 8-year-old through Roblox and an 11-year-old New Jersey girl abducted after being groomed on the platform.

October 2022 – California Class Action Lawsuit

A California class action lawsuit filed in 2022 named Roblox Corporation, Discord Inc., Snap Inc., and Meta Platforms Inc. as defendants for allegedly enabling the online exploitation of a teenage girl.

Plaintiffs claim that adult men used these interconnected online platforms to manipulate the minor into sharing explicit material and engaging in dangerous behavior while the companies failed to verify ages or restrict contact between adults and minors.

2019 – Early Warning Case of Child Sexual Abuse on Roblox

One of the earliest lawsuits against Roblox dates back to 2019, when a California mother discovered her son had been groomed by an adult user. The perpetrator persuaded the child to send sexually explicit images, highlighting early warnings about the Roblox platform’s vulnerability to abuse.

What Are the Biggest Child Safety Concerns Tied to Roblox?

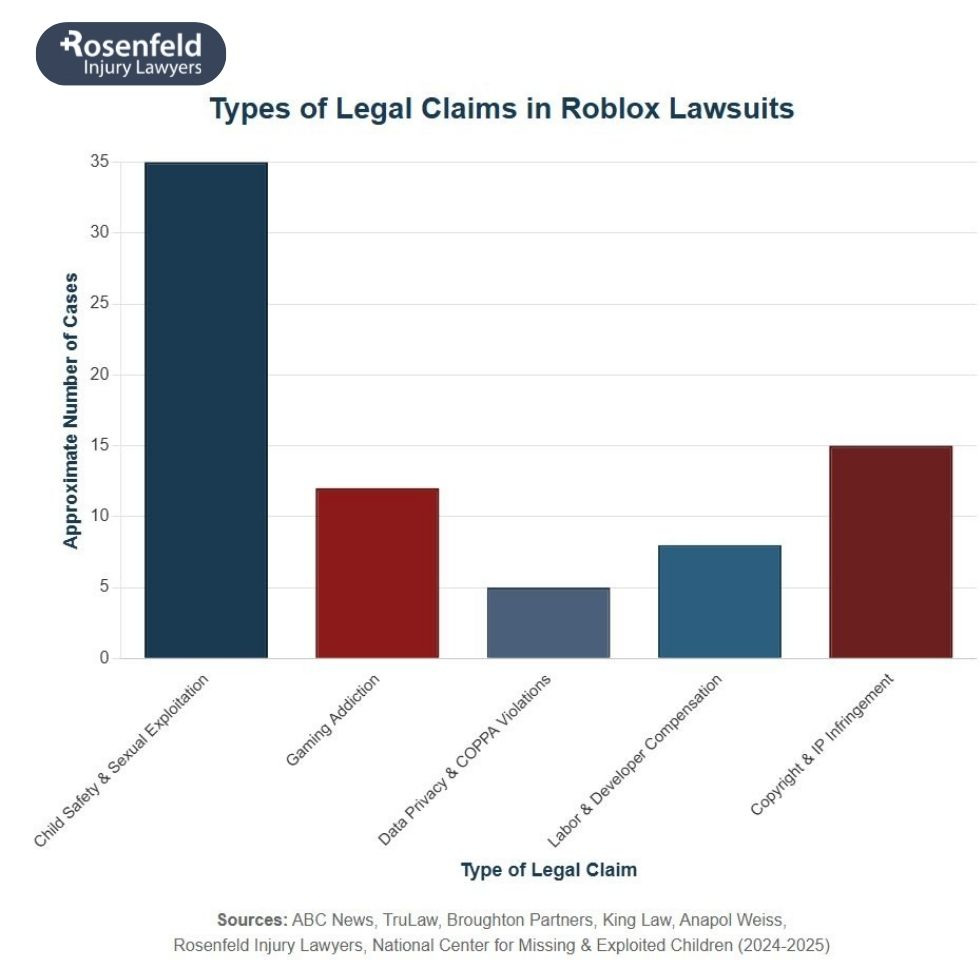

As one of the most widely used online gaming platforms, Roblox continues to face scrutiny for serious lapses in child safety. Dozens of Roblox sexual abuse and exploitation lawsuits claim that predators exploited weak safeguards, poor moderation, and misleading design choices that left young users vulnerable.

Families say the company failed to protect children from explicit images, unsafe in-game chats, and the spread of inappropriate content, leading to devastating emotional and psychological harm. Below are five major safety breakdowns cited in ongoing legal actions against Roblox.

Unfiltered Chats That Invite Predators

Chat and messaging tools remain among the most dangerous aspects of the Roblox platform. Instead of providing a fully moderated experience, Roblox allegedly allowed users, including adults, to exchange sexually explicit messages, send explicit images, and share links to other platforms.

Many Roblox lawsuits claim that predators used these in-game chats to build trust and move victims off-platform, exposing them to sexual abuse and grooming. Despite repeated warnings, Roblox allegedly failed to implement effective safety features to ensure online safety for minors.

Dangerous Games Disguised as Play

Roblox’s user-created content has long been central to its appeal, but it has also opened the door to exploitation. Numerous lawsuits and investigations allege that the company allowed games containing sexually explicit content, sexual acts, and other harmful content to remain live for months.

Plaintiffs argue these experiences encouraged minors to share explicit photos or interact with sexual predators under the guise of gameplay. Critics say this failure to enforce moderation standards shows a disregard for child safety and creates an unsafe environment for millions of vulnerable users.

Weak Age Verification That Enables Exploitation

One of Roblox’s most criticized flaws is its reliance on self-reported birthdates with no meaningful identity checks. This loophole allows adults to impersonate children and engage with minors directly. In several Roblox sexual abuse lawsuits, predators exploited this gap to groom victims and solicit sexually explicit images.

Attorneys argue that basic safeguards, such as verified parental consent, facial age estimation, and tighter parental controls, could have prevented many of these tragedies. Plaintiffs continue to seek to hold Roblox accountable for failing to adopt proven tools that protect minors.

Slow and Ineffective Moderation of Inappropriate Content

Another recurring issue in the Roblox lawsuits filed nationwide is the company’s sluggish and inconsistent content moderation. Reports indicate that explicit content, predatory accounts, and offensive games often remained accessible long after being reported.

Critics allege that Roblox prioritized user growth and profits over child safety, allowing unsafe material to spread unchecked. Families affected by these failures describe lasting emotional distress, saying Roblox’s delayed action made it easier for sexual predators to exploit children.

Off-Platform Communication That Fuels Exploitation

Many of the most serious grooming incidents began on Roblox but escalated through Discord or other messaging apps. The company’s social features and in-game prompts often encourage players to communicate beyond Roblox, where oversight is minimal.

Multiple lawsuits now claim that this lack of restriction enabled predators to contact and manipulate children privately. Plaintiffs argue that Roblox’s design choices directly contributed to the rise in child exploitation cases, creating real-world consequences and lifelong trauma for victims.

Legal Grounds for Roblox Sexual Abuse Lawsuits

Families filing a Roblox lawsuit allege that Roblox failed in its fundamental duty to protect children from foreseeable harm. These cases focus on how Roblox and Discord allowed child exploitation to occur on platforms marketed as safe spaces for minors.

Plaintiffs argue that corporate negligence, poor oversight, and misleading assurances enabled widespread abuse that caused severe emotional distress and long-term psychological harm.

Civil claims against these technology companies are grounded in both state and federal law.

Negligence and Corporate Misconduct

A growing number of plaintiffs across the United States claim that Roblox Corporation and Discord Inc. ignored years of internal safety warnings about online predators and unsafe chat features. Each complaint alleges that the platforms prioritized profits and engagement over user welfare, leaving children exposed to sexual exploitation.

Families pursuing these legal actions argue that Roblox’s design choices—such as open chat systems and unverified accounts—made exploitation predictable and preventable.

Defective Platform Design and Unsafe Features

Many lawsuits assert that the gaming platform itself was defectively designed. By failing to implement stronger moderation tools, reporting systems, and remote account management options for parents, Roblox allegedly allowed predators to use its environment to exchange or share explicit images with minors.

Plaintiffs contend that these design failures turned what should have been a child-friendly experience into a global risk for grooming and trafficking.

Violations of Federal Child Protection Laws

Numerous lawsuits reference the Children’s Online Privacy Protection Act (COPPA) and the Trafficking Victims Protection Act (TVPA), two federal laws aimed at preventing child exploitation online.

Attorneys for victims claim that Roblox’s and Discord’s business models encouraged user growth without adequate screening, leading to sexual content and grooming that violated these statutes. These claims seek to hold both corporations liable for ignoring known dangers within their ecosystems.

False Advertising and Consumer Deception

Parents say Roblox and Discord misrepresented their platforms as “safe for kids” while allowing predators to operate freely. This allegedly deceptive marketing forms the basis of additional claims for consumer fraud and negligent misrepresentation.

Families argue that the companies knowingly concealed the risks of inappropriate content and unsafe interactions, persuading millions of parents to trust a system that failed to deliver on its safety promises.

Failure to Warn or Intervene

Another common allegation is that Roblox failed to warn parents about the high risk of exploitation or to remove dangerous users once reports were filed. Each Roblox lawsuit filed under this theory argues that the company had access to moderation data and chat logs showing predatory patterns but refused to act.

This inaction, families claim, directly contributed to the abuse and compounded their children’s emotional distress.

Section 230 and the Limits of Immunity

Defense attorneys often cite Section 230 of the Communications Decency Act, which shields online companies from liability for user-generated content. However, plaintiffs maintain that Roblox went far beyond passive hosting by designing interactive features that enabled and monetized unsafe behavior.

Our attorneys contend that this conduct strips the company of that immunity and allows families to pursue meaningful accountability through civil legal action.

Who Can File a Roblox Sexual Abuse Lawsuit?

Families whose children were harmed may qualify to bring a Roblox lawsuit if exploitation or grooming occurred through these platforms. These cases often involve minors exposed to sexual content shared by adults using the company’s systems.

You may be eligible to pursue a claim if:

- A child experienced online grooming or child exploitation through Roblox or its connected chat features

- Reports of abuse or suspicious activity were ignored or inadequately handled by Roblox or Discord

- Your family suffered emotional, financial, or physical harm after a predator used the platform to contact your child

Parents and legal guardians can file on a child’s behalf, while survivors who have reached adulthood may also seek compensation independently. Our experienced team of Roblox lawsuit lawyers can explain your rights, help you pursue justice, and guide you through potential Roblox settlements or ongoing litigation.

What Damages Can Victims Recover?

Victims of abuse and exploitation connected to Roblox may be entitled to financial compensation through a Roblox lawsuit. These claims address not only the trauma caused by online grooming but also the lasting financial and emotional toll on families.

Compensation may include:

- Medical and therapy expenses for treatment related to trauma, anxiety, or long-term recovery

- Lost income or educational setbacks tied to the impact of the abuse

- Emotional distress and diminished quality of life

- Punitive damages when corporate negligence or willful misconduct contributed to the harm

How Rosenfeld Injury Lawyers Can Help You Take Legal Action

At Rosenfeld Injury Lawyers, our child injury lawyers represent families across the country in cases involving abuse and exploitation linked to Roblox. Our work focuses on holding major technology companies accountable when their platforms—once promoted as safe, popular online gaming platforms—put children at risk of real and lasting harm.

Our attorneys review evidence such as user communications, Roblox account records, and moderation logs to determine how failures in safety and oversight contributed to exploitation. We also collaborate with digital forensics experts to trace patterns of misconduct, including the use of Roblox’s in-game currency to target or manipulate minors.

We understand that these cases affect every aspect of a child’s life. Beyond legal strategy, our mass tort lawyers help families plan for recovery and long-term protection, with the goal of preventing future harm.

Book a Free Consultation

At Rosenfeld Injury Lawyers, our class action attorneys handle every Roblox lawsuit on a contingency fee basis, meaning you pay nothing unless we recover compensation for your family. There are no upfront costs or hidden fees. Our firm covers the expenses of investigation, expert review, and litigation so you can focus on healing and protecting your child’s future.

If your family has been affected by abuse or exploitation through Roblox or Discord, contact us today for a free consultation. We’ll review your potential claim, explain your legal options, and help you determine the best path forward. Our personal injury attorneys are here to provide clarity, confidentiality, and the support you need to move toward justice.